The AI Content Detection Flaw: Asking a Cat to Catch Itself

Your professional writing is being flagged as AI. The tool doing the flagging is AI. Welcome to the most ironic loop in modern content.

Exploring the deep irony and fundamental flaws of AI content detection tools. Why do they punish quality writing and create a logical paradox? Learn a smarter path forward for writers and marketers.

You’ve just poured your expertise into a document. The arguments are razor-sharp, the structure is flawless, and the prose is so clean it could have been written by a… well, that’s the problem, isn’t it?

You submit your work, proud of its professional sheen. Hours later, the email arrives. The client is concerned. The editor is questioning your integrity. The AI content detection tool has flagged your work. The verdict: “100% AI-Generated.”

Your reward for a lifetime of learning to write clearly is to be told your work looks synthetic. You are a victim of the modern-day witch hunt, where the tool designed to find the witch is, itself, a witch. We are, quite literally, asking a cat to catch itself.

From Revolution to Paradox

This isn’t my first time at the AI rodeo. I’ve written extensively about its transformative power, from how it’s engineering CRISPR breakthroughs to the moment AlphaFold revolutionized medicine. I’ve analyzed it as the revolutionary force transforming research and writing.

I am not a sceptic. I am an advocate for its potential. But precisely because I understand its capabilities and limitations, I can also see the profound irony of the system we’ve built to police it. This isn’t an argument against AI; it’s an argument against a flawed and illogical response to it.

The Fatal Flaw in AI Content Detection

This self-referential loop is not just a philosophical idea; it’s the actual architectural flaw of AI content detection tools, as the data below shows.

Let’s unravel the paradox. AI content detection tools like ZeroGPT or Copyleaks are not mystical truth oracles. They are, themselves, artificial intelligence. They are machine learning models trained on a dataset of text, learning to identify statistical patterns—like “perplexity” (how surprising a word choice is) and “burstiness” (the variation in sentence structure)—that differentiate a sample of human-written text from a sample of AI-generated text.
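To make those two signals concrete, here is a toy sketch in Python. It is not how ZeroGPT, Copyleaks, or any commercial detector works internally. It assumes a simple unigram word model with add-one smoothing for perplexity and uses the spread of sentence lengths as a rough stand-in for burstiness. The function names `unigram_perplexity` and `burstiness` are hypothetical, chosen only for illustration.

```python
import math
import re
import statistics
from collections import Counter


def _tokenize(text: str) -> list[str]:
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


def unigram_perplexity(text: str, reference: str) -> float:
    """Perplexity of `text` under a unigram model fit on `reference`.

    Assumption: add-one (Laplace) smoothing, so unseen words still get
    a small nonzero probability. Real detectors use far larger models.
    """
    ref_tokens = _tokenize(reference)
    tokens = _tokenize(text)
    if not tokens:
        raise ValueError("text has no word tokens")
    counts = Counter(ref_tokens)
    vocab_size = len(set(ref_tokens) | set(tokens))
    total = len(ref_tokens)
    log_prob = sum(
        math.log((counts[tok] + 1) / (total + vocab_size)) for tok in tokens
    )
    # Perplexity is the exponential of the average negative log-probability.
    return math.exp(-log_prob / len(tokens))


def burstiness(text: str) -> float:
    """Standard deviation of sentence lengths in words (a crude proxy)."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    lengths = [len(s.split()) for s in sentences]
    return statistics.pstdev(lengths) if len(lengths) > 1 else 0.0
```

Under these assumptions, text that reuses the reference vocabulary scores a lower perplexity than text full of unexpected words, and a passage of evenly sized sentences scores a burstiness of zero. Clean, consistent prose pushes both numbers in the direction a detector reads as "machine-like".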

And herein lies the fatal flaw: they are a product of the very thing they are meant to detect. This creates a closed, circular logic system. It’s an AI, trained on a binary of human vs. AI, trying to police the output of another AI that was, in turn, trained on human text to emulate it. The cat is not only judging the cat; it invented the concept of “catness.”

It is the digital equivalent of using fire to protect oneself from fire.

The Punishment for Perfection

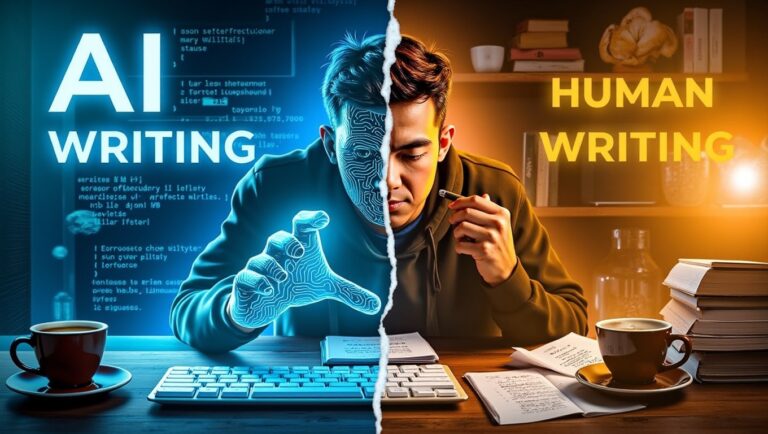

The most damning indictment of this system is its tragic irony: it frequently flags high-quality human writing as AI-generated.

Why? Because the hallmarks of “good” writing are often the same hallmarks these AI content detection gizmos have been trained to flag. AI models like ChatGPT are optimized to produce clear, grammatically impeccable, and predictable text—the very goal of any professional writer.

Therefore, when a skilled professional produces a well-structured, flawless article, it ticks all the wrong boxes for the detector: low perplexity, high predictability. The tool, designed to find AI, instead finds competence and punishes it. This is the ultimate absurdity I warned about in AI vs Human Writing: a failure to understand what truly gives writing its human value.

This creates a perverse incentive to “dumb down” writing, to intentionally inject errors and awkwardness to seem more “human”—a race to the bottom that serves no one, least of all the reader who demanded quality in the first place. The mother of all ironies: those who demand professional work reject it for being too professional.

The Unreliable Science of AI Content Detection

While the promise of AI content detection is alluring, its reliability is shockingly low. The core of the problem lies in the misleading “confidence score” these tools output. Independent data underscores significant variability and error margins, revealing a high rate of false positives—human-written text incorrectly flagged as AI-generated content.

For instance, while GPTZero can accurately flag 91–100% of purely AI-written essays, it still mislabels a substantial number of genuine human essays [Source: arXiv]. The reality for many tools is even starker. ZeroGPT once claimed ~98% accuracy, but real-world tests report success rates as low as 35–65%, with 20–80% of human-written text incorrectly labeled as AI [Source: HIX AI]. Copyleaks, by contrast, reports over 99% accuracy with a false-positive rate of just 0.2%. The result is a broad and confusing spread in accuracy metrics across the industry.

This inconsistency isn’t a minor glitch; it’s a fundamental flaw in the underlying technology. AI content detection systems struggle to differentiate between “high-quality” and “AI-generated” writing, and so they end up punishing precision, clarity, and formal language. As a result, writing by non-native English speakers, academic abstracts, and even passages from classic literature are frequently misidentified. No tool is 100% reliable, and false positives are a common, damaging occurrence.
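A quick back-of-the-envelope calculation shows why the false-positive rate matters more than the headline accuracy. The sketch below applies Bayes' rule to ask: if a document is flagged, how likely is it to actually be AI-written? The function name `positive_predictive_value` is hypothetical, and the 10% share of AI-written submissions is an assumption made purely for illustration, not a figure from any of the sources above. The detection and false-positive rates are picked from the ranges quoted above and do not describe any single tool.

```python
def positive_predictive_value(
    detection_rate: float, false_positive_rate: float, ai_share: float
) -> float:
    """Probability that a flagged document is really AI-written.

    detection_rate: fraction of AI-written documents the tool flags.
    false_positive_rate: fraction of human documents it wrongly flags.
    ai_share: assumed fraction of all submissions that are AI-written.
    """
    true_flags = detection_rate * ai_share
    false_flags = false_positive_rate * (1.0 - ai_share)
    if true_flags + false_flags == 0:
        raise ValueError("no documents would be flagged")
    return true_flags / (true_flags + false_flags)


# Illustrative inputs only: a 91% detection rate with two different
# false-positive rates, and a hypothetical 10% share of AI submissions.
noisy_tool = positive_predictive_value(0.91, 0.20, 0.10)
careful_tool = positive_predictive_value(0.91, 0.002, 0.10)
print(f"20% false positives:  {noisy_tool:.0%} of flags are correct")
print(f"0.2% false positives: {careful_tool:.0%} of flags are correct")
```

With these assumed inputs, roughly one flag in three is correct at a 20% false-positive rate, versus about 98% at 0.2%. The same "flagged" verdict can mean very different things depending on which tool produced it and how much AI text is really in the pile.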

We are asked to trust a flawed AI system to protect us from the flaws of other AI systems—a modern-day parable of using fire to fight fire.

How We Fuel the Fire: Blind Faith and Hallucinations

This scepticism didn’t emerge from a vacuum. It was earned. For every writer unfairly flagged, there is another user blindly prompting an AI, accepting its first draft—hallucinations, fabrications, and all—and publishing it without a second thought. This blind usage is the genuine problem. It floods the web with confident, authoritative nonsense, eroding trust in everything we read online.

AI tools like GPT-3.5 and GPT-4 are notorious for generating inaccurate or entirely fabricated information, including false citations and sources that do not exist—a phenomenon known as “hallucination”. The sceptics are right to be wary of this. But in their panic, they’ve deployed a broken smoke alarm that goes off every time someone cooks a gourmet meal.

The High-Stakes Impact on SEO and Publishing

This unreliability has real-world consequences, making AI content detection mission-critical in high-stakes industries. In SEO and publishing, Google’s algorithms have begun penalizing sites for “massive AI-generated content.” A March 2024 update caused dramatic ranking collapses for sites relying on undisclosed AI-written text, an “uncontrolled risk” given how easily detectors can spot such material. [Source: Search Logistics]

This has forced the industry to adapt. Surveys show 75% of news organizations now use AI somewhere in their workflow. Editors feed suspicious drafts into detectors not as definitive proof, but as a flag for human review to mitigate risks like bias or misinformation. The goal isn’t to ban AI but to ensure a human conductor is guiding the process, maintaining the critical scrutiny that purely AI-generated news can lack. [Source: The Washington Post, Originality.ai]

The Ethical Peril in Education and Beyond

Nowhere are the ethical implications more severe than in education. Teachers increasingly rely on detectors to catch AI-assisted essays, but experts stress that no detector is infallible. Technology reports show even well-known tools like Turnitin misidentify about 4% of writing at the sentence level, and similar false positives have led to innocent students being wrongly accused of cheating. [Source: The Washington Post]

This creates a profound ethical dilemma. As Copyleaks itself cautions, even a 0.2% false-positive rate “can damage careers and academic achievements.” AI content detection tools may inadvertently encode bias, where formal, technical, or non-native English writing is misjudged as AI-generated. The chilling effect is real: students might avoid legitimate AI-assisted learning or even intentionally “dumb down” their writing with errors to evade detection, the ultimate irony in the pursuit of academic integrity.
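The arithmetic behind that warning is easy to sketch. The snippet below uses the 0.2% and 4% figures quoted above. Everything else is an assumption for illustration: the cohort of 10,000 human-written essays, the 50-sentence essay length, and above all the simplification that each sentence is judged independently, which real detectors almost certainly do not do. Both function names are hypothetical.

```python
def expected_false_flags(human_documents: int, false_positive_rate: float) -> float:
    """Expected number of human-written documents wrongly flagged."""
    return human_documents * false_positive_rate


def chance_of_any_flag(sentences: int, per_sentence_rate: float) -> float:
    """Chance that at least one sentence in a human essay is misflagged.

    Assumption: sentences are misflagged independently of one another.
    This is a strong simplification, so treat the result as a rough guide.
    """
    return 1.0 - (1.0 - per_sentence_rate) ** sentences


# Hypothetical cohort: 10,000 human-written essays at a 0.2% rate.
print(expected_false_flags(10_000, 0.002))

# Hypothetical 50-sentence essay at a 4% sentence-level rate.
print(round(chance_of_any_flag(50, 0.04), 2))
```

Under these assumptions, about 20 honest writers in every 10,000 are wrongly flagged at the 0.2% rate, and a 50-sentence human essay has roughly an 87% chance of containing at least one misflagged sentence at the 4% sentence-level rate. Small percentages stop looking small once they are multiplied across a whole institution.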

The responsible path forward, echoed by educators and publishers alike, is to use these scores as a single data point to prompt conversations—not as an unchallengeable judgment that dictates outcomes. This safeguards against the severe consequences of false accusations while embracing the need for transparency in the age of AI.

A Better Path Forward: The Conductor, Not the Instrument

It’s time to move beyond this illogical war. The question must shift from “Who or what made this?” (an unanswerable and often irrelevant question) to “Is this content valuable, accurate, and effective?”

This requires a new framework, one of intelligent assistance, not blind generation:

- Value Human Oversight, Not Just Human Origin: The label should shift from “AI-Generated” to “AI-Assisted” or “Human-Curated.” The value is in the human guiding the process: providing the strategy, the insight, the fact-checking, and the final approval. The human is the conductor, not just the musician.

- Audit the Process, Not Just the Product: Trust should be built on transparency of process. How do your writers use these tools? Are they thinking critically? Are they verifying claims? This is a more meaningful conversation than running a flawed AI content detection aid.

- Prioritize Performance Metrics: Judge content by what it actually does. Track its engagement, its SEO performance, its conversion rates. Great content, regardless of its origin, will perform. Bad content will fail. Let outcomes be the ultimate judge.

Conclusion: Stop Asking the Cat

Asking an AI to detect AI content is a logical folly, a snake eating its own tail. In our pursuit of an unattainable purity test, we are creating a system that misunderstands both technology and the very nature of quality writing.

The future isn’t about building better mousetraps to catch synthetic text. It’s about raising our standards for what makes content worthwhile. It’s time to stop worrying about how the sausage is made and start focusing on how it tastes. Let’s end the paradoxical hunt and embrace a smarter, more outcome-focused approach to the content we create.

The tools are here. We can use them wisely, as I’ve shown in my previous work, or we can use them to foolishly chase our own tails. The choice is ours. Let’s choose intelligence over irony.

FAQ: Unraveling the AI Detection Paradox

- If AI detectors are so flawed, what should I use instead to check for AI content?

The best tool is an informed human mind. Shift your focus from detection to evaluation. Look for hallmarks of expertise: unique personal insights, up-to-date and specific sources, nuanced understanding of complex topics, and yes, even the beautiful imperfection of a creative analogy. Use AI detectors only as a flag for deeper review — never as definitive proof.

- Can AI content detection falsely flag human writing?

Absolutely. Polished, professional writing often mirrors the statistical “predictability” detectors are trained to look for. This leads to false positives where skilled human work is mislabeled as synthetic. Formal tone, structured arguments, or even just clear grammar can trigger AI-like scores. That’s why detectors should prompt conversation, not condemnation.

- But my university or company mandates the use of AI detectors. What can I do?

Your best defense is knowledge and transparency. If flagged, explain your writing and research process, share drafts and sources, and cite evidence that detectors frequently mislabel formal work. You’re not only defending yourself — you’re educating the institution about the limitations of the tools they’ve chosen to rely on.

- Isn’t any detection better than no detection at all?

Not when the cost of being wrong is so high. A faulty smoke alarm that rings every time you cook isn’t safety — it’s noise. Over time, people stop trusting it, and the real danger gets ignored. Similarly, widespread false accusations from AI detectors erode trust, punish competence, and create a culture of fear that does more harm than the problem itself.

- How can I use AI ethically as a writer without getting flagged?

Use AI as an assistant, not the author. It works best for brainstorming, summarizing complex topics, or polishing grammar. But the final product should come from your expertise, critical thinking, and unique voice. This “human in the loop” approach not only produces higher-quality work but also minimizes the risk of being wrongly flagged.

- Will Google penalize AI-generated content detected by AI content detection tools?

No — Google has made clear that it values usefulness and quality, not whether content was typed by human fingers or assisted by AI. Poorly written or unverified AI text may struggle, but AI-assisted, fact-checked, and insightful content performs well. Focus on value, not beating detectors.

- Why does AI content detection matter to people who don’t write for a living?

This issue is bigger than writing. It mirrors how society risks blindly trusting flawed algorithms in education, hiring, finance, and even policing. Students get accused of cheating, applicants are screened out by bots, and citizens face biased automated systems. Questioning AI detectors is practice for questioning algorithmic decisions everywhere.

🤖 Meta-Irony Disclosure: This article was researched, structured, and polished with the help of several AI tools — and the images you see were generated by AI image creators. You are welcome to run this through any AI content detection gizmo to verify whether it’s human-made or AI-generated (we have no legal objections at all!).

For those who are still afraid of asking AI to create such content but secretly hope to copy it — we see you. 😂🤣🤪😁

The point isn’t the tool; it’s the craftsman wielding it.
